Microsoft Fabric, Microsoft’s latest data and analytics offering, has been a hot topic for the last few months. MS Fabric facilitates a unified platform for data engineers, data scientists, citizen data analytics, data stewards, and business users. While Fabric’s inbuilt capabilities cover most of the critical components and requirements of a successful data platform implementation, some areas are still challenging to overcome just by using Microsoft Fabric.

What are the challenges you will face in Microsoft Fabric Implementation?

- Collaboration: How can a team of data engineers work on a feature of the data platform without stepping on each other’s toes?

- Deployment: How do we deploy data warehouse code changes in a modular and governed way?

- Repetitive works: How could templates for repetitive code blocks be implemented?

- Handing Schema drifts: How do you handle when new columns are added and modified in the underlying source systems?

- Documentation: How do you document business logic and data warehouse schemas?

- Data quality testing: How to performance test data for quality and validate business logic?

- Code Quality: How do we validate code quality inside the data warehouse?

- Applying standards and maintaining consistency: How can we ensure that all developers adhere to coding standards and patterns?

What is dbt?

dbt, Data Build Tool, is a well-known tool used within the data and analytics landscape. dbt started in 2015/2016 as a transformation tool for Stitch and was later handed over to the open-source community under Apache 2.0 licensing. The open-source, free dbt offering is called dbt core. dbt also has a commercial offering called dbt Cloud, which is a SaaS offering backed by enterprise support. dbt Cloud has more capabilities than open source dbt core. This blog post only focuses on how dbt core features and capabilities can be used with Microsoft Fabric to overcome some of the abovementioned challenges.

dbt is used to implement Transformation(T) in the ETL/ETL workload. It uses the computation power of the data platform technology and orchestrates data transformation. dbt has adapters that allow it to connect to various data platforms such as Snowflake, Big Query, and Databricks. Soon after Microsoft Fabric was announced, the dbt adapter for MS Fabric was also announced, and dbt Cloud announced that it supports Fabric.

More Read: https://www.getdbt.com/blog/dbt-cloud-is-now-available-for-microsoft-fabric

How dbt works with Microsoft Fabric?

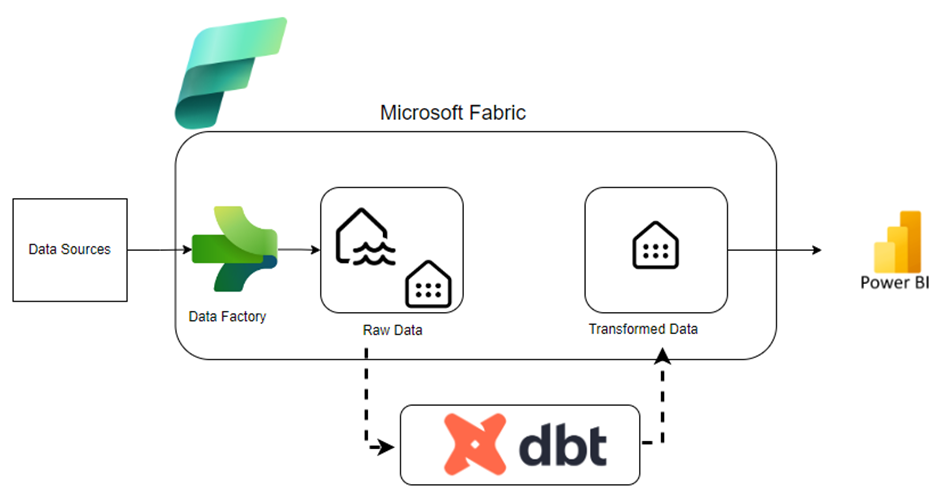

The first step of the Fabric implementation is to read data from source systems and load it into a stage. Fabric pipelines can read data from source systems and load it into One Lake. A Fabric Lakehouse can be implemented on the One Lake data files and this Lakehouse will be a source system for dbt. Dbt will read data from sources, perform transformations, and finally materialise it as tables and views in a Fabric Data warehouse.

How does dbt help to overcome limitations in Fabric?

- Collaboration

- dbt core enables VS Code or Visual Studio base local development experience. This is a similar experience to Python or Java development for software developers. You can debug and run code locally while connecting to the development data platform instances. dbt allows the development of SQL transformations in a modular way, which reduces duplication and improves collaboration. Code is stored in source control platforms such as Azure DevOps or GitHub.

- Deployment

- Deployment of data warehouse codes such as views, tables, and merge statements from one environment to another environment is straightforward in dbt. You can define multiple target environments in your profile file in the dbt project, such as development, test and production warehouse endpoints. When you execute the dbt run command and define the target environment, dbt will publish the artefacts to target warehouse points. Using variable concepts in dbt, you can define the dynamic behaviors of the code, such as changing the source lake house.

- Repetitive works

- dbt support packages, which facilitate reuse code blocks across multiple dbt implementations. There are community-built packages available for anyone to use freely. You can go and download packages from the “dbt hub”. However, the Microsoft Fabric adapter does not support all packages as of writing this post.

- Also, you can implement your own Macros, written in Jinja template language, to build reusable custom logics/ codes. Jinja allows you to bring programming into the SQL code and opens many possibilities when working with SQL-based development.

- Documentation and data lineage

- dbt has an auto-documentation feature, which generates nicely formatted documents about your data warehouse assets and schema. You can add descriptions to fields or tables and views within your dbt code. Also, dbt connects to the data warehouse, extracts metadata, and creates complete documentation about the data warehouse, including data lineage.

- Data quality testing.

- Within dbt, you can define tests. There are two types of tests in dbt. One is called a generic test. This allows parametrised queries to be defined that accept arguments to perform predefined tests. There are prebuilt tests available, such as “unique”, “not_null”, and “accepted_values”. Another type of test is called a singular test. This allows you to write any custom business logic in SQL to test your transforms and data in the data warehouse.

- Schema Changes

- Within dbt increment models, you can define “on_schema_change” property, which defines the expected behavior when underlying source fields have changed. If new columns in the source systems need to be available, you can set the value to “append_new_columns”. If the new columns need to be added and missing fields need to be removed, you can define it as “sync_all_columns”.

Conclusion

dbt is a proven data transformation tool in the market, and it enables software engineering capabilities such as DevOps, packages, and test programming to be brought to data warehousing. Microsoft Fabric is a SaaS offering that enables an organisation to implement a unified data platform. When combined, these two provide a robust framework for data and analytics. Currently, the dbt adapter for Microsoft Fabric is in the early stages of development, and it does not support all the capabilities of other adapters, such as Snowflake and Big Query. However, leveraging dbt in a Fabric implementation can overcome common problems in a data warehousing implementation in MS Fabric.